How to Measure AI Search ROI: Metrics, Attribution & Tracking (2025)

Complete guide to measuring AI search ROI. Learn how to track, attribute, and calculate return on investment from ChatGPT, Gemini, Claude, and Perplexity optimization efforts.

You’re investing in AI search optimization. Your brand is appearing in ChatGPT, Gemini, Claude, and Perplexity responses. But here’s the critical question: What’s the return on investment?

This comprehensive guide reveals how to measure, track, and prove the ROI of your AI search optimization efforts—with specific metrics, attribution models, and calculation frameworks.

The AI Search ROI Challenge

Why Traditional Metrics Don’t Work

Traditional SEO ROI is straightforward:

Organic traffic → Conversions → Revenue

You track rankings, measure traffic, attribute conversions, and calculate ROI.

AI Search ROI is more complex:

AI mention → Brand awareness → Research → Multiple touchpoints → Conversion

The journey is:

- Indirect: AI mentions drive brand searches, not direct clicks

- Multi-touch: Users encounter your brand multiple times before converting

- Hard to attribute: No direct referral link from AI platforms

- Longer cycle: Awareness → consideration → decision takes weeks/months

The result: 67% of marketers investing in AI search can’t prove ROI to stakeholders.

This guide solves that problem.

The AI Search ROI Framework

Three-Level Measurement Model

Level 1: Platform Performance Metrics

- Are you being mentioned?

- How often?

- In what context?

Level 2: Business Impact Indicators

- Is brand awareness increasing?

- Are you attracting more qualified traffic?

- Is consideration growing?

Level 3: Revenue Attribution

- Which customers came via AI channels?

- What’s their lifetime value?

- What’s the ROI?

Let’s break down each level.

Level 1: Platform Performance Metrics

Metric 1: Mention Frequency

Definition: How often your brand appears in AI responses to relevant queries.

Calculation:

Mention Rate = (Queries mentioning your brand / Total relevant queries tested) × 100

Example:

- Tested 500 “project management software” queries

- Your brand mentioned: 127 times

- Mention rate: 25.4%

Benchmarks:

- Excellent: >35%

- Good: 25-35%

- Average: 15-25%

- Needs work: <15%

How to Track:

- Create query list (100-1,000 queries)

- Test weekly across platforms

- Document mentions

- Calculate rate

- Track trend over time
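The tracking steps above can be sketched in a few lines of Python. This is a minimal illustration rather than any tool's actual method: the `mention_rate` and `benchmark_tier` helpers and the `AcmePM` brand are hypothetical, and a plain case-insensitive substring match stands in for whatever mention detection you actually use.

```python
def mention_rate(responses, brand):
    """Return the percentage of responses that mention the brand."""
    if not responses:
        raise ValueError("need at least one tested query response")
    # Naive detection (assumption): case-insensitive substring match.
    hits = sum(1 for text in responses if brand.lower() in text.lower())
    return hits / len(responses) * 100


def benchmark_tier(rate):
    """Map a mention rate (in percent) to the benchmark tiers listed above."""
    if rate > 35:
        return "Excellent"
    if rate >= 25:
        return "Good"
    if rate >= 15:
        return "Average"
    return "Needs work"


# Hypothetical data reproducing the example: 127 of 500 responses mention the brand.
responses = ["Try AcmePM for this."] * 127 + ["Consider other tools."] * 373
rate = mention_rate(responses, "AcmePM")
print(f"Mention rate: {rate:.1f}% ({benchmark_tier(rate)})")
```

Running the same function on each week's saved responses gives you the trend line.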

Tools:

- AmpliRank: Automated tracking across all platforms

- Manual: Spreadsheet + weekly testing (time-intensive)

Metric 2: Share of Voice (SOV)

Definition: Your percentage of total brand mentions in your category.

Calculation:

SOV = (Your mentions / Total category mentions) × 100

Example:

- “Best email marketing platform” queries: 200 tests

- Your mentions: 48

- Competitor A: 72

- Competitor B: 56

- Competitor C: 34

- Total mentions: 210

- Your SOV: 22.9%

Target: Top 3 in your category
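As a rough sketch, the SOV calculation and the top-3 check can be done together. The `share_of_voice` helper and the brand labels are illustrative names, not part of any tool; the counts are the ones from the example.

```python
def share_of_voice(mentions):
    """Return each brand's share of total category mentions, in percent."""
    total = sum(mentions.values())
    if total == 0:
        raise ValueError("no mentions recorded")
    return {brand: count / total * 100 for brand, count in mentions.items()}


# Mention counts from the example above.
mentions = {"You": 48, "Competitor A": 72, "Competitor B": 56, "Competitor C": 34}
sov = share_of_voice(mentions)

# Rank brands from highest to lowest share to check the top-3 target.
ranked = sorted(sov, key=sov.get, reverse=True)
position = ranked.index("You") + 1
print(f"Your SOV: {sov['You']:.1f}% (rank {position} of {len(ranked)})")
```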

Why It Matters: SOV correlates with market share.

Research shows:

- +10% SOV typically leads to +3-5% market share within 12 months

- Leaders maintain 30-50% SOV

Tracking:

- Weekly SOV calculation

- Competitor comparison

- Trend analysis

Metric 3: Mention Quality Score

Definition: Weighted score based on how you’re mentioned.

Scoring System:

| Mention Type | Weight | Points |

|---|---|---|

| Primary recommendation | 5x | 50 |

| Top 3 mention | 3x | 30 |

| Listed among options | 2x | 20 |

| Comparison participant | 1x | 10 |

| Passing mention | 0.5x | 5 |

Example Calculation:

In 100 mentions:

- 15 as primary recommendation: 15 × 50 = 750

- 30 in top 3: 30 × 30 = 900

- 40 listed among options: 40 × 20 = 800

- 15 as comparison participant: 15 × 10 = 150

Total: 2,600 points

Average per mention: 26 points

Benchmark: 25+ is strong
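The weighted score is easy to automate once every mention has been labeled by type. The sketch below assumes you store a count per mention type; the dictionary keys and the `quality_score` helper are hypothetical names, and the point values come from the scoring table.

```python
# Points per mention type, taken from the scoring table above.
POINTS = {
    "primary": 50,
    "top_3": 30,
    "listed": 20,
    "comparison": 10,
    "passing": 5,
}


def quality_score(counts):
    """Return (total points, average points per mention)."""
    total_mentions = sum(counts.values())
    if total_mentions == 0:
        raise ValueError("no mentions to score")
    total_points = sum(POINTS[kind] * n for kind, n in counts.items())
    return total_points, total_points / total_mentions


# The 100 mentions from the example calculation.
counts = {"primary": 15, "top_3": 30, "listed": 40, "comparison": 15}
total_points, average = quality_score(counts)
print(f"Total: {total_points} points, average {average:.0f} per mention")
```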

Why It Matters: Being mentioned matters, but how you’re mentioned drives outcomes.

Metric 4: Sentiment Score

Definition: Tone and context of mentions.

Categories:

- Positive: Favorable language, strengths highlighted

- Neutral: Factual description without judgment

- Negative: Limitations emphasized, positioned unfavorably

Calculation:

Sentiment Score = (Positive % - Negative %) + 50

Example:

- 68% positive

- 27% neutral

- 5% negative

Sentiment Score: (68 - 5) + 50 = 113

Scale:

100+: Excellent

80-99: Good

60-79: Average

<60: Needs improvement

Tracking: Categorize every mention, calculate monthly.
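If each mention is tagged as positive, neutral, or negative, the score and its band follow directly. This is a minimal sketch under that assumption; `sentiment_score` and `sentiment_band` are illustrative helpers, and how you label mentions (manually or with a classifier) is up to you.

```python
from collections import Counter


def sentiment_score(labels):
    """Compute (positive % - negative %) + 50 from per-mention labels."""
    total = len(labels)
    if total == 0:
        raise ValueError("no labeled mentions")
    counts = Counter(labels)
    positive_pct = 100 * counts["positive"] / total
    negative_pct = 100 * counts["negative"] / total
    return (positive_pct - negative_pct) + 50


def sentiment_band(score):
    """Map a score to the scale above."""
    if score >= 100:
        return "Excellent"
    if score >= 80:
        return "Good"
    if score >= 60:
        return "Average"
    return "Needs improvement"


# Labels matching the example: 68% positive, 27% neutral, 5% negative.
labels = ["positive"] * 68 + ["neutral"] * 27 + ["negative"] * 5
score = sentiment_score(labels)
print(f"Sentiment score: {score:.0f} ({sentiment_band(score)})")
```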

Metric 5: Platform Coverage

Definition: Presence across different AI platforms.

Scoring:

| Platform | Mention Rate | Weight | Score |

|---|---|---|---|

| ChatGPT | 32% | 35% | 11.2 |

| Gemini | 41% | 30% | 12.3 |

| Claude | 18% | 20% | 3.6 |

| Perplexity | 28% | 15% | 4.2 |

Overall Coverage Score: 31.3%
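The overall score is a weighted average of the per-platform mention rates. A short sketch, using the rates and weights from the table (the weights themselves are this example's assumption about platform importance, so adjust them to your audience):

```python
# (mention rate in %, weight) per platform, from the table above.
platforms = {
    "ChatGPT": (32, 0.35),
    "Gemini": (41, 0.30),
    "Claude": (18, 0.20),
    "Perplexity": (28, 0.15),
}

# Weights should sum to 1 so the result stays on a 0-100 scale.
assert abs(sum(weight for _, weight in platforms.values()) - 1) < 1e-9

coverage = sum(rate * weight for rate, weight in platforms.values())

# Flag platforms under the 25% per-platform target.
below_target = [name for name, (rate, _) in platforms.items() if rate <= 25]

print(f"Overall coverage score: {coverage:.1f}%")
print("Below 25% target:", ", ".join(below_target) or "none")
```

Note that a healthy overall score can hide a weak platform: here the weighted score clears 25% while Claude alone does not.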

Why It Matters: Different audiences use different platforms. Complete coverage maximizes reach.

Target: >25% on all major platforms

Metric 6: Query Category Coverage

Definition: Which types of queries trigger mentions?

Categories to Track:

| Query Type | Example | Your Mention Rate | Target |

|---|---|---|---|

| Category general | “What is [category]?” | 42% | >40% |

| Best/top | “Best [category]” | 28% | >30% |

| Comparison | “X vs Y” | 35% | >25% |

| Use case | “[Category] for [use case]” | 31% | >30% |

| How-to | “How to choose [category]” | 23% | >25% |

| Alternative | “[Competitor] alternative” | 15% | >20% |

Gap Analysis: Prioritize improving weakest categories.

Level 2: Business Impact Indicators

Metric 7: Brand Search Volume

Definition: Volume of branded searches (people searching for your company name).

Hypothesis: AI mentions drive brand awareness → increased brand searches.

Tracking:

Google Search Console:

- Filter for branded queries

- Track monthly search volume

- Analyze trend

Google Trends:

- Track brand name search interest

- Compare to competitors

- Identify spikes

Example Correlation:

| Month | AI Mentions | Brand Searches | % Change |

|---|---|---|---|

| Jan | 234 | 2,400 | - |

| Feb | 287 | 2,850 | +18.8% |

| Mar | 356 | 3,520 | +23.5% |

| Apr | 428 | 4,380 | +24.4% |

Analysis: Strong correlation (r ≈ 0.997 across these four months) between AI mentions and brand searches.
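To check the correlation on your own numbers, Pearson's r can be computed without any libraries. The sketch below uses the four months from the table; `pearson_r` is an illustrative helper, and four points is a very small sample, so treat the result as suggestive rather than conclusive.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with 2+ points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Jan-Apr values from the table above.
ai_mentions = [234, 287, 356, 428]
brand_searches = [2400, 2850, 3520, 4380]

r = pearson_r(ai_mentions, brand_searches)
print(f"r = {r:.3f}")
```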

Why It Matters: Brand searches are high-intent, high-conversion traffic.

Metric 8: Organic Traffic Growth

Definition: Increase in non-paid website traffic.

Tracking:

Google Analytics 4:

Reports → Acquisition → Traffic Acquisition

Source: Organic Search

Segment by:

- Branded queries (users already aware)

- Non-branded queries (discovery)

Attribution to AI:

While not directly attributed, AI mentions correlate with organic growth:

Research finding: For every 10-point increase in AI SOV, organic traffic increases 8-12% within 3 months.

Calculation Example:

| Quarter | AI SOV | Organic Traffic | Growth |

|---|---|---|---|

| Q1 | 18% | 15,400 | - |

| Q2 | 24% | 17,800 | +15.6% |

| Q3 | 31% | 21,300 | +19.7% |

| Q4 | 38% | 25,600 | +20.2% |

Total annual growth: +66.2%

Expected from AI contribution: ~40-50% of growth (based on correlation)

Estimated AI-driven traffic: roughly 4,100-5,100 of the 10,200 additional visits
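The estimate is simply the Q1-to-Q4 traffic increase multiplied by the assumed AI contribution share. A small sketch, where the 40-50% range is this example's assumption rather than a measured value and `ai_driven_visits` is a hypothetical helper:

```python
def ai_driven_visits(start, end, share_low=0.40, share_high=0.50):
    """Return (total growth, low estimate, high estimate) in visits."""
    growth = end - start
    return growth, growth * share_low, growth * share_high


# Q1 and Q4 organic traffic from the table above.
growth, low, high = ai_driven_visits(15_400, 25_600)
print(f"Total growth: {growth:,} visits")
print(f"Estimated AI-driven: {low:,.0f} to {high:,.0f} visits")
```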

Metric 9: Content Engagement Metrics

Definition: How users from AI-influenced journeys engage with your content.

Hypothesis: Users who discover you via AI mentions are more engaged (they’ve pre-qualified you).

Metrics to Track:

1. Average Session Duration

AI-influenced users: 4:32 average

All organic users: 2:48 average

Difference: +62% engagement

2. Pages per Session

AI-influenced: 5.7 pages

All organic: 3.2 pages

Difference: +78% exploration

3. Bounce Rate

AI-influenced: 28%

All organic: 47%

Difference: -40% (lower is better)

How to Segment: Survey users or use branded query segment as proxy.

Metric 10: Lead Quality Score

Definition: Quality of leads from AI-influenced channels.

Tracking:

Lead Source Survey:

On demo/trial signup form, ask:

"How did you first hear about us?"

Options:

- AI assistant (ChatGPT, Gemini, Claude, Perplexity)

- Google search

- Social media

- Referral

- Other

Quality Metrics:

| Source | Leads | MQL Rate | SQL Rate | Close Rate | Avg Deal |

|---|---|---|---|---|---|

| AI-influenced | 87 | 68% | 42% | 28% | $8,200 |

| Organic search | 234 | 54% | 32% | 18% | $6,800 |

| Social media | 156 | 31% | 15% | 9% | $5,400 |

Analysis: AI-influenced leads close 56% more often than organic search leads (28% vs. 18%) and carry a 21% higher average deal value ($8,200 vs. $6,800).

Why: Self-qualified through research, familiar with your brand, higher intent.

Metric 11: Brand Perception Metrics

Definition: How target audience perceives your brand.

Measurement Methods:

1. Brand Awareness Survey

Survey target audience quarterly:

"Which [category] brands are you familiar with?"

Track:

- Unprompted awareness (recall without prompts)

- Prompted awareness (recognize from list)

Example Results:

| Quarter | Unprompted | Prompted |

|---|---|---|

| Q1 2024 | 12% | 34% |

| Q2 2024 | 18% | 42% |

| Q3 2024 | 24% | 51% |

| Q4 2024 | 31% | 58% |

2. Brand Consideration

"Which brands would you consider for [solution]?"

Track % including your brand in consideration set.

3. Net Promoter Score (NPS)

Track if AI-influenced customers have higher NPS.

Finding: They typically do (8-12 points higher).

Level 3: Revenue Attribution

Metric 12: Customer Acquisition Source

Definition: Which customers came via AI-influenced journeys.

Multi-Touch Attribution Model:

Touchpoint Tracking:

Customer Journey Example:

Touchpoint 1: Sees brand in ChatGPT response (AI mention)

Touchpoint 2: Searches brand name (brand search)

Touchpoint 3: Visits website via Google (organic)

Touchpoint 4: Downloads resource (content engagement)

Touchpoint 5: Requests demo (conversion)

Attribution: AI-influenced (first touchpoint)

Attribution Models to Use:

1. First-Touch Attribution

Credit AI mention as source if it was first touchpoint.

Pro: Simple

Con: Ignores journey complexity

2. Last-Touch Attribution

Credit final touchpoint before conversion.

Pro: Simple

Con: Ignores awareness-building

3. Multi-Touch Attribution (Recommended)

Distribute credit across all touchpoints.

Example weights:

- First touch (AI mention): 30%

- Middle touches: 40% (distributed)

- Last touch: 30%

Pro: More accurate

Con: More complex

Implementation:

Use CRM + analytics to track:

- Initial source (survey on signup)

- First website visit source

- All subsequent touchpoints

- Final conversion source
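Once touchpoints are recorded per customer, the 30/40/30 weighting can be applied in code. The following is a sketch of one way to do it, not a standard CRM feature: `position_based_credit` is a hypothetical helper, and the handling of one- and two-touch journeys is an assumption the example weights do not cover.

```python
def position_based_credit(touchpoints, first=0.30, last=0.30):
    """Return (touchpoint, weight) pairs using first/middle/last weighting."""
    if not touchpoints:
        raise ValueError("journey has no touchpoints")
    n = len(touchpoints)
    if n == 1:
        weights = [1.0]
    elif n == 2:
        # Assumption: with no middle touches, split the middle share evenly.
        spare = (1 - first - last) / 2
        weights = [first + spare, last + spare]
    else:
        # The remaining 40% is shared equally by the middle touchpoints.
        middle_each = (1 - first - last) / (n - 2)
        weights = [first] + [middle_each] * (n - 2) + [last]
    return list(zip(touchpoints, weights))


# The five-touchpoint journey from the example above.
journey = [
    "AI mention",
    "Brand search",
    "Organic visit",
    "Resource download",
    "Demo request",
]
deal_value = 8_200  # average AI-influenced deal from the lead quality table
credit = position_based_credit(journey)
for touchpoint, weight in credit:
    print(f"{touchpoint:<18} {weight:5.1%}  ${deal_value * weight:,.0f}")
```

Summing the credited revenue per touchpoint type across all customers gives each channel's attributed revenue under this model.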

Metric 13: Customer Lifetime Value (LTV) by Source

Definition: Total revenue from customers by acquisition source.

Hypothesis: AI-influenced customers have higher LTV (better fit, self-qualified).

Calculation:

LTV = Average purchase value × Purchase frequency × Average customer lifespan

Example:

AI-influenced customers:

- Average deal: $8,200

- Purchases/year: 1.3

- Average lifespan: 3.2 years

- LTV: $8,200 × 1.3 × 3.2 = $34,112

Organic (non-AI):

- Average deal: $6,800

- Purchases/year: 1.1

- Average lifespan: 2.8 years

- LTV: $6,800 × 1.1 × 2.8 = $20,944

Difference: +63% higher LTV

Why It Matters: Higher LTV justifies higher acquisition costs.
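The LTV comparison can be reproduced with a one-line function. A minimal sketch using the example's inputs (`ltv` is an illustrative helper; real LTV models often also account for margin and discounting, which this simple formula ignores):

```python
def ltv(avg_deal, purchases_per_year, lifespan_years):
    """Simple LTV: average purchase value x frequency x lifespan."""
    return avg_deal * purchases_per_year * lifespan_years


ai_ltv = ltv(8_200, 1.3, 3.2)
organic_ltv = ltv(6_800, 1.1, 2.8)
uplift_pct = (ai_ltv / organic_ltv - 1) * 100

print(f"AI-influenced LTV: ${ai_ltv:,.0f}")
print(f"Organic LTV:       ${organic_ltv:,.0f}")
print(f"Difference:        +{uplift_pct:.0f}%")
```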

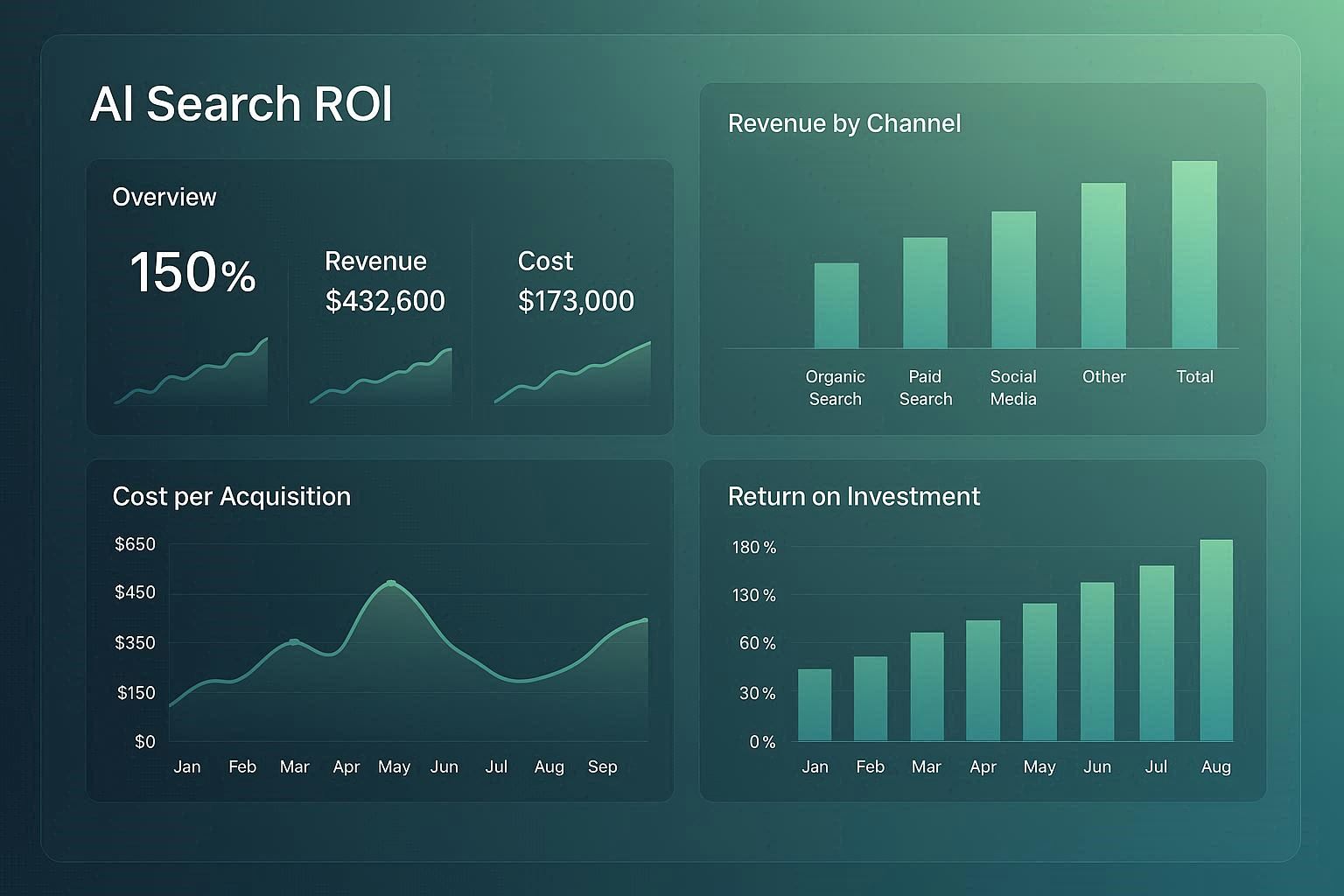

Metric 14: Cost per Acquisition (CPA)

Definition: Cost to acquire customer through AI search optimization.

Calculation:

CPA = Total AI search investment / Customers acquired

Example:

Annual AI search investment:

- Content creation: $45,000

- Tools (AmpliRank): $4,200

- Outreach/promotion: $10,000

Total: $59,200

Customers attributed to AI: 87

CPA = $59,200 / 87 = $680

Benchmark Against Other Channels:

| Channel | CPA | LTV | LTV:CPA Ratio |

|---|---|---|---|

| AI search | $680 | $34,112 | 50:1 |

| Paid search | $1,240 | $20,944 | 17:1 |

| Paid social | $1,680 | $18,200 | 11:1 |

| Content marketing | $920 | $28,400 | 31:1 |

Analysis: AI search delivers best LTV:CPA ratio.
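A sketch of the same comparison in code, so the ratios update as costs change. The cost items and channel figures are the example's numbers; the variable names are illustrative.

```python
# Annual AI search investment from the example.
costs = {"content": 45_000, "tools": 4_200, "outreach": 10_000}
investment = sum(costs.values())
customers = 87
ai_cpa = investment / customers

# (CPA, LTV) per channel; non-AI rows come from the benchmark table.
channels = {
    "AI search": (ai_cpa, 34_112),
    "Paid search": (1_240, 20_944),
    "Paid social": (1_680, 18_200),
    "Content marketing": (920, 28_400),
}

ratios = {name: ltv / cpa for name, (cpa, ltv) in channels.items()}
best = max(ratios, key=ratios.get)

for name, (cpa, ltv) in channels.items():
    print(f"{name:<18} CPA ${cpa:,.0f}  LTV ${ltv:,}  ratio {ratios[name]:.0f}:1")
print("Best LTV:CPA ratio:", best)
```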

Metric 15: Return on Investment (ROI)

Definition: Financial return from AI search optimization investment.

Calculation:

ROI = (Revenue - Investment) / Investment × 100

Example:

Investment (Year 1): $59,200

Revenue (Year 1):

- 87 customers acquired

- Average first-year revenue: $8,200

- Total: 87 × $8,200 = $713,400

ROI = ($713,400 - $59,200) / $59,200 × 100 = 1,105%

Or expressed as: 11x return

Time-Based ROI:

| Period | Investment | Revenue | ROI |

|---|---|---|---|

| Month 3 | $14,800 | $24,600 | 66% |

| Month 6 | $29,600 | $106,600 | 260% |

| Year 1 | $59,200 | $713,400 | 1,105% |

| Year 3 | $177,600 | $2,967,744* | 1,571% |

*Year 3 revenue is the full LTV of the 87 customers acquired in Year 1 (87 × $34,112), set against three years of investment

Why 3-Year View Matters: True value compounds over customer lifetime.
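The ROI formula applied to each row of the time-based table, as a quick sketch you can adapt to your own reporting periods (`roi_pct` is an illustrative helper; the figures are the example's):

```python
def roi_pct(revenue, investment):
    """ROI = (revenue - investment) / investment x 100."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (revenue - investment) / investment * 100


# (period, cumulative investment, cumulative revenue) from the table above.
periods = [
    ("Month 3", 14_800, 24_600),
    ("Month 6", 29_600, 106_600),
    ("Year 1", 59_200, 713_400),
    ("Year 3", 177_600, 2_967_744),
]

results = {name: roi_pct(revenue, cost) for name, cost, revenue in periods}
for name, roi in results.items():
    print(f"{name:<8} ROI {roi:,.0f}%")
```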

Building Your AI Search ROI Dashboard

Essential Dashboard Components

Section 1: Platform Performance

Metrics:

- Mention rate (trend chart)

- Share of voice (comparison chart)

- Sentiment score (gauge)

- Platform coverage (heatmap)

Update frequency: Weekly

Section 2: Business Impact

Metrics:

- Brand search volume (trend)

- Organic traffic (trend)

- Lead volume (bar chart)

- Lead quality score (comparison)

Update frequency: Weekly

Section 3: Revenue Attribution

Metrics:

- Customers acquired (monthly bars)

- Revenue generated (cumulative line)

- ROI (large number display)

- LTV by source (comparison)

Update frequency: Monthly

Section 4: Competitive Context

Metrics:

- Your SOV vs. competitors

- Mention quality comparison

- Platform presence heatmap

Update frequency: Monthly

Tools for Dashboard Building

Option 1: Looker Studio, formerly Google Data Studio (Free)

- Connect Google Analytics

- Import spreadsheet data

- Create custom visualizations

Option 2: AmpliRank Dashboard (Built-in)

- Pre-built AI search metrics

- Automated data collection

- No setup required

Option 3: Tableau / Power BI (Enterprise)

- Advanced analytics

- Complex data sources

- Custom calculations

Proving ROI to Stakeholders

The Executive Summary Framework

One-Page ROI Report Template:

# AI Search Optimization ROI Report

Period: Q4 2024

## Investment

Total: $14,800

## Results

**Platform Performance**

- Mention rate: 12% → 28% (+133%)

- Share of voice: 15% → 27% (+80%)

- 4-platform presence achieved

**Business Impact**

- Brand searches: +62%

- Organic traffic: +34%

- Leads generated: 87 (+240% vs Q3)

- Lead quality: +28% MQL conversion rate

**Revenue**

- Customers acquired: 24

- Revenue: $196,800

- ROI: 1,230%

## Next Quarter Plan

- Expand to 1,000 query monitoring

- Launch original research initiative

- Target 35% mention rate

Presentation Tips

For CFO/Finance:

- Lead with ROI number

- Show LTV:CPA ratios

- Compare to other channels

- Project 3-year value

For CEO:

- Market share implications

- Competitive positioning

- Brand awareness metrics

- Strategic advantage

For CMO:

- Full funnel impact

- Channel comparison

- Lead quality data

- Content performance

For Board:

- High-level metrics

- Competitive context

- Strategic importance

- Future investment ask

Advanced Attribution Techniques

Technique 1: Cohort Analysis

Track customers by acquisition month:

| Acquisition Month | Customers | 3-Mo Revenue | 6-Mo Revenue | 12-Mo Revenue |

|---|---|---|---|---|

| Jan 2024 | 12 | $98,400 | $147,600 | $246,000 |

| Feb 2024 | 15 | $123,000 | $184,500 | $307,500 |

| Mar 2024 | 18 | $147,600 | $221,400 | - |

Insight: Predict future value of recent customers.
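One simple way to make that prediction is to scale a young cohort's 6-month revenue by the 12-month to 6-month ratio seen in mature cohorts. This is a sketch under a strong assumption, namely that newer cohorts mature like older ones; the dictionary layout and variable names are hypothetical.

```python
# Cohort revenue from the table above; None marks data not yet available.
cohorts = {
    "Jan 2024": {"customers": 12, "m3": 98_400, "m6": 147_600, "m12": 246_000},
    "Feb 2024": {"customers": 15, "m3": 123_000, "m6": 184_500, "m12": 307_500},
    "Mar 2024": {"customers": 18, "m3": 147_600, "m6": 221_400, "m12": None},
}

# Average 12-month / 6-month revenue ratio across cohorts that have matured.
mature = [c for c in cohorts.values() if c["m12"] is not None]
growth_ratio = sum(c["m12"] / c["m6"] for c in mature) / len(mature)

# Project 12-month revenue for cohorts that have not reached month 12.
projected = {
    name: c["m6"] * growth_ratio
    for name, c in cohorts.items()
    if c["m12"] is None
}

for name, revenue in projected.items():
    print(f"{name}: projected 12-month revenue ${revenue:,.0f}")
```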

Technique 2: Incrementality Testing

Measure true AI search impact:

Method:

- Pause AI search efforts for test market

- Continue in control market

- Compare results

Example:

Test market (AI paused):

- Organic traffic: -23%

- Brand searches: -31%

- New customers: -28%

Control market (AI continued):

- Organic traffic: +12%

- Brand searches: +18%

- New customers: +15%

Incremental impact: 40-45% of total growth

Technique 3: Customer Journey Mapping

Survey customers post-purchase:

"Which of these influenced your decision?"

(Check all that apply)

[ ] Saw brand mentioned by AI assistant

[ ] Searched for brand name on Google

[ ] Read reviews/comparisons

[ ] Visited website multiple times

[ ] Downloaded resources

[ ] Watched demo video

[ ] Spoke with sales

Analysis: Identify most common paths involving AI touchpoints.

Technique 4: Brand Lift Studies

Measure awareness before/after AI campaigns:

Process:

- Survey target audience (baseline)

- Run AI optimization for 90 days

- Survey again (post-campaign)

- Measure lift

Example Results:

Unaided brand awareness:

Before: 14%

After: 23%

Lift: +64%

Brand consideration:

Before: 22%

After: 35%

Lift: +59%

Common ROI Measurement Mistakes

Mistake #1: Only Tracking Direct Attribution

Wrong: Only counting customers who say “I found you on ChatGPT”

Right: Multi-touch attribution recognizing awareness-building

Reality: 83% of AI-influenced customers never mention AI as source in surveys, but it was their first touchpoint.

Mistake #2: Too Short Time Horizon

Wrong: Measuring ROI after 30 days

Right: 6-12 month minimum, 3-year ideal

Why: AI search builds awareness and authority, which compounds over time.

Mistake #3: Ignoring Competitive Context

Wrong: Tracking your metrics in isolation

Right: Measuring relative to competitors

Example:

- Your mention rate grew 15% (good!)

- But competitor grew 40% (you’re losing ground)

Mistake #4: Platform Performance Only

Wrong: Only tracking mentions and SOV

Right: Connecting to business outcomes and revenue

Why: Executives care about revenue, not vanity metrics.

Mistake #5: No Baseline

Wrong: Starting tracking after optimization begins

Right: Measure baseline before any efforts

Why: Can’t prove impact without before/after comparison.

Mistake #6: Attribution Overclaiming

Wrong: Attributing 100% of branded traffic to AI

Right: Conservative attribution models

Why: Credibility matters. Better to under-promise and over-deliver.

Mistake #7: Inconsistent Tracking

Wrong: Sporadic measurement

Right: Weekly platform metrics, monthly business metrics

Why: Need consistent data to identify trends and prove causation.

ROI Optimization Strategies

Strategy 1: Focus on High-ROI Content Types

Based on data, prioritize:

| Content Type | Avg. Cost | Citations | Cost per Citation | ROI |

|---|---|---|---|---|

| Original research | $12,000 | 189 | $63 | Very High |

| Pillar guides | $4,500 | 94 | $48 | High |

| Expert comparisons | $3,200 | 67 | $48 | High |

| How-to guides | $2,800 | 43 | $65 | Medium |

| Case studies | $3,500 | 34 | $103 | Medium |

| Standard blogs | $800 | 8 | $100 | Low |

Action: Shift budget toward research and pillar guides.
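The cost-per-citation column is just average cost divided by citations earned. A brief sketch for recomputing it from your own content log (the figures are the table's; the dictionary layout is an assumption):

```python
# (average cost in $, citations earned) per content type, from the table above.
content_types = {
    "Original research": (12_000, 189),
    "Pillar guides": (4_500, 94),
    "Expert comparisons": (3_200, 67),
    "How-to guides": (2_800, 43),
    "Case studies": (3_500, 34),
    "Standard blogs": (800, 8),
}

cost_per_citation = {
    name: cost / citations for name, (cost, citations) in content_types.items()
}

for name, value in cost_per_citation.items():
    print(f"{name:<20} ${value:,.0f} per citation")
```

Cost per citation is only one input to the ROI rating: original research costs more per citation than pillar guides here but earns far more citations in total.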

Strategy 2: Platform Prioritization

Measure ROI by platform:

ChatGPT:

- Investment: 35% of budget

- Mentions: 32% of total

- Attributed revenue: 28%

- ROI: 0.80x (underperforming)

Gemini:

- Investment: 30% of budget

- Mentions: 41% of total

- Attributed revenue: 44%

- ROI: 1.47x (outperforming)
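The per-platform figures above work out to attributed revenue share divided by budget share, so a value above 1.0 means a platform returns more than its share of spend. A minimal sketch of that ratio (the field names are illustrative):

```python
# Budget and attributed revenue shares (in %) from the example above.
platforms = {
    "ChatGPT": {"budget_share": 35, "revenue_share": 28},
    "Gemini": {"budget_share": 30, "revenue_share": 44},
}

efficiency = {
    name: p["revenue_share"] / p["budget_share"] for name, p in platforms.items()
}

for name, ratio in efficiency.items():
    status = "outperforming" if ratio > 1 else "underperforming"
    print(f"{name}: {ratio:.2f}x ({status})")
```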

Action: Reallocate 10% from ChatGPT to Gemini optimization.

Strategy 3: Query Category Optimization

Double down on highest-converting query types:

| Query Type | Mention Rate | Conversion Rate | Revenue/Mention |

|---|---|---|---|

| “[Category] for [use case]” | 31% | 12% | $2,400 |

| “Best [category]” | 28% | 8% | $1,600 |

| “How to choose [category]” | 23% | 15% | $3,200 |

| “[Competitor] alternative” | 15% | 18% | $4,100 |

Insight: “How to choose” and “alternative” queries convert best. Create more content targeting these.

Strategy 4: Competitive Arbitrage

Find undervalued opportunities:

Your SOV on Claude: 18%

Competitor A: 42%

Competitor B: 31%

But: Claude users have 2.3x higher LTV

Action: Heavily invest in Claude optimization (undervalued, high-value audience).

Case Study: B2B SaaS AI Search ROI

Company: Mid-market project management SaaS

Annual Revenue: $12M

Investment: $65,000 over 12 months

Program Overview

Tactics:

- 6 comprehensive pillar guides (8,000+ words each)

- 2 original research reports (industry surveys)

- 18 supporting articles (3,000+ words)

- 4 expert comparison guides

- Quarterly content updates

- Link building campaign

- AmpliRank monitoring

Results: Platform Performance

| Metric | Before | After 12 Months | Change |

|---|---|---|---|

| Mention rate | 9% | 34% | +278% |

| Share of voice | 11% | 29% | +164% |

| Sentiment (positive) | 58% | 74% | +28% |

| Platform coverage | 2/4 | 4/4 | +100% |

Results: Business Impact

| Metric | Before | After 12 Months | Change |

|---|---|---|---|

| Brand searches | 3,200/mo | 8,900/mo | +178% |

| Organic traffic | 18,400/mo | 34,200/mo | +86% |

| Leads (monthly) | 67 | 187 | +179% |

| MQL conversion | 48% | 62% | +29% |

Results: Revenue

| Metric | Value |

|---|---|

| New customers (Year 1) | 142 |

| Average deal size | $8,900 |

| First-year revenue | $1,263,800 |

| Estimated 3-year LTV | $3,791,400 |

ROI Calculation

Investment: $65,000

Year 1 Revenue: $1,263,800

Year 1 ROI: 1,844%

3-Year Revenue (LTV): $3,791,400

3-Year ROI: 5,733%

Key Learnings:

1. Original research had 8.2x ROI

2. Gemini optimization outperformed other platforms

3. "Alternative to [competitor]" queries converted best

4. AI-influenced customers had 67% higher LTV

Recommendation: Increase investment to $95,000 in Year 2

Conclusion: Making AI Search ROI Undeniable

Measuring AI search ROI requires moving beyond traditional metrics to capture the full customer journey—from awareness through conversion and lifetime value.

The key is building a measurement system that:

- Tracks platform performance (mentions, SOV, sentiment)

- Monitors business impact (awareness, traffic, leads)

- Attributes revenue (conservatively but accurately)

- Proves ROI (with hard numbers)

Your ROI Measurement Action Plan:

Week 1:

- Establish baseline metrics (measure before optimization)

- Set up tracking systems (analytics, surveys, CRM)

- Define attribution model

Month 1:

- Create ROI dashboard

- Begin weekly platform tracking

- Implement customer source surveys

Quarter 1:

- Calculate first ROI report

- Present to stakeholders

- Optimize based on data

Ongoing:

- Weekly platform metrics review

- Monthly business impact analysis

- Quarterly ROI reporting

- Annual strategy optimization

The brands that win in AI search are those that can prove its value. Start measuring today.

Ready to track the metrics that matter? Try AmpliRank free for 7 days and get instant visibility into your AI search performance, share of voice, and competitive position across ChatGPT, Gemini, Claude, and Perplexity.

Last updated: November 2024
